The Evolution of Artificial Intelligence: From Basics to Advanced Applications

2025-03-11 4:35

Artificial Intelligence (AI) has come a long way since its inception. Initially a theoretical concept, it has evolved into one of the most transformative technologies of the modern world, with applications spanning various industries.

1. The Early Days of Artificial Intelligence

AI has roots dating back to the 1950s, with pioneers like Alan Turing, John McCarthy, and Marvin Minsky laying the groundwork for the field. Turing’s 1950 proposal of what became known as the Turing Test, designed to assess whether a machine can exhibit intelligent behavior indistinguishable from a human’s, and McCarthy’s coining of the term “Artificial Intelligence” were pivotal moments in AI history. During this period, AI research focused on symbolic AI, in which machines reasoned by manipulating symbols according to explicit, hand-written logical rules.

Key Milestones:

- 1956: The Dartmouth Conference, the summer workshop for which McCarthy coined the term “Artificial Intelligence” and which established AI as a research field.

- 1950s-1970s: Early AI programs, such as chess-playing programs and expert systems, emerged.

2. The Rise of Machine Learning (ML)

The real breakthrough in AI came with the rise of Machine Learning (ML). While early AI systems relied on predefined rules and logic, machine learning algorithms allowed machines to learn from data and improve their performance over time without explicit programming.

This shift from rule-based systems to data-driven models laid the foundation for many of the modern applications of AI that we see today.
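The contrast between a rule-based system and a data-driven one can be sketched in a few lines of Python. This is a toy illustration only: the spam-detection framing, the `TRAINING_DATA` values, and the function names are all hypothetical, and real ML systems use far richer features and models than a single threshold.

```python
# Hypothetical toy data: (exclamation marks in a message, whether it was spam).
TRAINING_DATA = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]


def rule_based_is_spam(exclamations: int) -> bool:
    """Rule-based approach: a programmer hard-codes the cutoff."""
    return exclamations > 3


def learn_threshold(data):
    """Data-driven approach: choose the cutoff with the fewest errors on the data."""
    candidates = sorted({x for x, _ in data})
    best_threshold, best_errors = None, len(data) + 1
    for t in candidates:
        # Count examples where the prediction "x > t" disagrees with the label.
        errors = sum((x > t) != label for x, label in data)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


def learned_is_spam(exclamations: int, threshold: int) -> bool:
    """Apply the cutoff that was learned from data."""
    return exclamations > threshold


threshold = learn_threshold(TRAINING_DATA)
print("learned threshold:", threshold)
print("rule-based says spam for 3:", rule_based_is_spam(3))
print("learned model says spam for 3:", learned_is_spam(3, threshold))
```

The hard-coded rule only changes when a programmer edits it, whereas `learn_threshold` would return a different cutoff if it were given different examples. That is the sense in which such a system learns from data.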

Key Milestones:

- 1980s: The resurgence of neural networks, loosely inspired by how the human brain processes information, after backpropagation was popularized as a practical way to train multi-layer networks.

- 1990s-2000s: The development of more advanced ML algorithms, including support vector machines and tree-based ensemble methods such as random forests.

3. Deep Learning: The Game Changer

Deep Learning, a subfield of Machine Learning, has significantly impacted AI’s capabilities. By using multi-layered neural networks, deep learning models can process vast amounts of data and extract complex patterns. These models have revolutionized fields like computer vision, natural language processing (NLP), and speech recognition.
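To see why stacking layers matters, here is a minimal sketch of a two-layer network that computes XOR, a pattern no single-layer network of this kind can represent. The weights are hand-picked for illustration rather than learned, and the names `step`, `neuron`, and `tiny_network` are hypothetical. Real deep learning models learn their weights from data and use many more layers and units.

```python
def step(z: float) -> int:
    """Threshold activation: the unit fires (1) when its input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias) -> int:
    """One unit: a weighted sum of the inputs plus a bias, passed through step."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def tiny_network(x1: int, x2: int) -> int:
    """Two-layer network with hand-set weights that computes XOR."""
    # Hidden layer: one unit detects "x1 OR x2", the other "x1 AND x2".
    h_or = neuron([x1, x2], [1.0, 1.0], -0.5)
    h_and = neuron([x1, x2], [1.0, 1.0], -1.5)
    # Output layer: fires when OR is on but AND is off.
    return neuron([h_or, h_and], [1.0, -1.0], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

The hidden layer turns the raw inputs into intermediate features (OR and AND), and the output layer combines them. Deep networks repeat this idea across many layers, which is how they build up complex patterns from simple ones.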

Deep learning is the driving force behind some of the most powerful AI applications today, such as self-driving cars, facial recognition systems, and virtual assistants like Siri and Alexa.

Key Milestones:

- 2006: Geoffrey Hinton and others introduced deep belief networks, a precursor to modern deep learning.

- 2010s: Breakthroughs in convolutional neural networks (CNNs) and recurrent neural networks (RNNs) led to major advancements in image and speech recognition.

4. AI in Industry: From Research to Real-World Applications

As AI has matured, its applications have expanded into almost every sector. AI is no longer just a research field but a key technology powering real-world solutions. Some of the most prominent AI applications include:

- Healthcare: AI is revolutionizing diagnostics, drug discovery, and personalized treatment plans.

- Finance: AI is used for fraud detection, risk management, and algorithmic trading.

- Transportation: Self-driving cars and smart traffic systems use AI to improve safety and efficiency.

- Entertainment: AI-powered recommendation engines on platforms like Netflix and Spotify personalize user experiences.

- Retail: AI is used for inventory management, predictive analytics, and customer service chatbots.

5. The Future of AI: Ethical Considerations and Emerging Trends

While AI has the potential to solve many global challenges, it also raises important ethical concerns, including privacy issues, bias in algorithms, and the displacement of jobs due to automation. As AI technology continues to evolve, it will be crucial to ensure that it is developed and used responsibly.

Emerging trends in AI include:

- Explainable AI (XAI): Aiming to make AI decisions more transparent and understandable.

- AI in Creativity: AI is being used to generate art, music, and even write stories.

- Artificial General Intelligence (AGI): The pursuit of AI that can perform any intellectual task that a human can do.

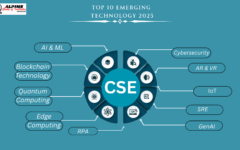

How a BTech in Computer Science Engineering Can Help You Contribute to AI

For those interested in diving into the world of AI, a BTech in Computer Science Engineering (CSE) provides a strong foundation. This program typically covers:

- Core Concepts of AI and ML: Students learn about algorithms, data structures, and the fundamentals of machine learning, which are essential for AI development.

- Mathematics and Statistics: A solid grasp of linear algebra, calculus, probability, and statistics is crucial for understanding and developing AI models.

- Programming Skills: Mastery of programming languages like Python, Java, and R is vital for implementing AI algorithms.

- Data Science and Big Data: A BTech in CSE often includes courses in data analysis and working with large datasets, which is key to building AI models.

- Hands-On Projects: Most programs offer practical experience through internships or projects that involve real-world applications of AI and machine learning.

By pursuing a BTech in CSE, students gain the knowledge and hands-on experience necessary to contribute to AI research, development, and its various applications. Graduates are well-equipped to work as data scientists, machine learning engineers, AI researchers, and more.

Conclusion

The evolution of AI, from early theoretical models to the powerful technologies of today, showcases the incredible potential of this field. As AI continues to shape industries across the globe, there has never been a better time to pursue a career in this exciting area. A BTech in Computer Science Engineering provides the foundation and skills necessary to be part of the next wave of AI innovation.